vocal-tools

High-precision vocal analysis pipeline built to compensate for a hearing disability

- Problem

- Can't reliably hear yourself sing — 60% hearing loss, right ear

- Solution

- Python pipeline measuring 70+ vocal metrics at 44.1 kHz

- Proof

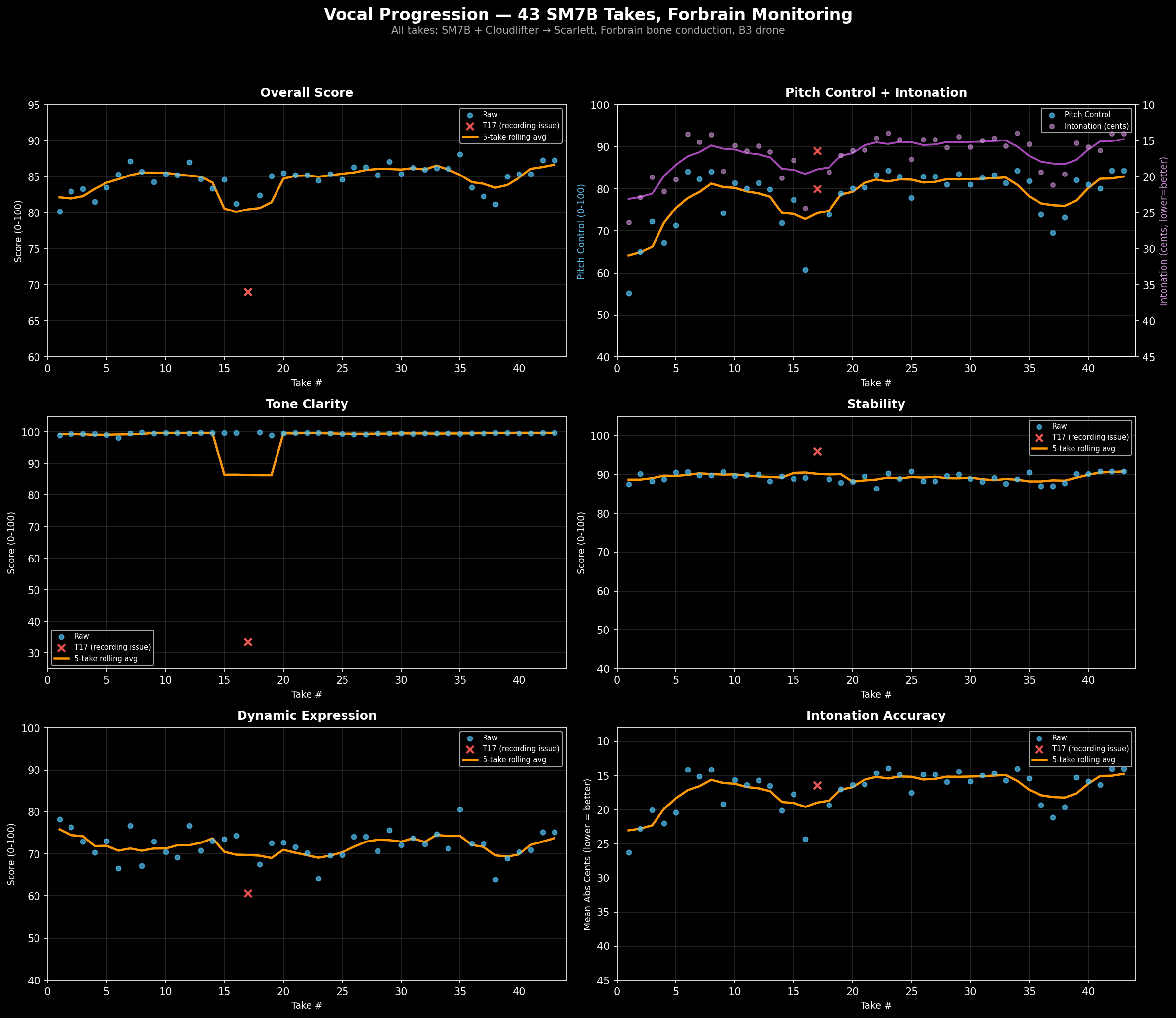

- 91.5 raw score → 92.8 tuned, 7.3c intonation, 168 analysis runs

- Stack

- Python, Praat, librosa, NumPy/SciPy, matplotlib

The Problem

I have audiology-confirmed hearing loss of roughly 60% in my right ear. When you can't reliably hear yourself sing, you can't trust your own judgment about pitch, tone, or quality. Vocal coaching helps, but between sessions there's no objective feedback loop. The ear doesn't heal. The tool has to compensate.

The Solution

A Python pipeline that analyzes vocal recordings at 44.1 kHz and produces more than 70 high-precision measurements, including pitch accuracy (cents deviation from target), formant structure (F1/F2/singer's formant ratio), tone clarity, jitter, shimmer, harmonics-to-noise ratio (HNR), and vibrato characteristics. Every take gets a composite score, a suite of diagnostic plots, and exportable CSV data.
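To make "cents deviation from target" concrete, here is a minimal sketch of the standard conversion from a frequency ratio to cents (1200 cents per octave, 100 per equal-tempered semitone). It is not taken from the pipeline itself: the function names, the A4 = 440 Hz reference, and the choice of mean absolute deviation as a summary are all assumptions made for illustration.

```python
import math

A4_HZ = 440.0  # assumed tuning reference, not confirmed by the project


def cents_deviation(f0_hz: float, target_hz: float) -> float:
    """Signed deviation of f0_hz from target_hz in cents (positive = sharp)."""
    return 1200.0 * math.log2(f0_hz / target_hz)


def nearest_note_hz(f0_hz: float, a4_hz: float = A4_HZ) -> float:
    """Frequency of the nearest equal-tempered note relative to a4_hz."""
    semitones_from_a4 = round(12.0 * math.log2(f0_hz / a4_hz))
    return a4_hz * 2.0 ** (semitones_from_a4 / 12.0)


def mean_abs_cents(f0_track, target_hz: float) -> float:
    """Mean absolute cents deviation over voiced frames.

    Unvoiced frames are assumed to be encoded as 0 or None and are skipped.
    """
    voiced = [f for f in f0_track if f and f > 0]
    if not voiced:
        raise ValueError("no voiced frames in pitch track")
    return sum(abs(cents_deviation(f, target_hz)) for f in voiced) / len(voiced)
```

Under this definition, a figure such as 7.3c would mean the sung pitch sits, on average, about 7% of a semitone away from the target. The real pipeline may weight or filter frames differently.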

Key Metrics Achieved

- 91.5 — best raw composite score (Take 19)

- 92.8 — best tuned composite score (Take 19 tuned)

- 7.3c — pitch intonation accuracy (tuned)

- 97.9 — tone clarity score (Take 24)

Progression

Architecture

The pipeline runs in three stages:

- Analysis: `vocal_take_analyzer.py` processes WAV files through Praat (pitch tracking, formant extraction, jitter/shimmer/HNR) and librosa (onset detection, spectral analysis). Outputs CSV metrics and diagnostic plots.

- Composition: `vocal_comp_builder.py` selects the best segments across multiple takes and builds a composite. Section-level scoring ensures emotional continuity.

- Cleanup: `vocal_comp_cleanup.py` handles gap management (comfort noise fill), leveling, dehum, declick, and edge smoothing. Produces an Auto-Tune-ready output.
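The composition stage can be pictured as a per-section selection problem. The sketch below is an illustration only, not the logic of `vocal_comp_builder.py`: the function name, the score layout, and the `switch_margin` rule (stay on the current take unless another take is better by more than a margin, as one plausible way to favor continuity) are all hypothetical.

```python
from typing import Dict, List, Tuple


def build_comp_plan(
    scores: Dict[str, Dict[str, float]],
    section_order: List[str],
    switch_margin: float = 0.0,
) -> List[Tuple[str, str]]:
    """Choose one take per section from scores[take][section].

    Hypothetical continuity rule: keep the previously chosen take unless
    another take beats it by more than switch_margin on this section.
    """
    plan: List[Tuple[str, str]] = []
    current = None
    for section in section_order:
        # Only takes that actually contain this section are candidates.
        candidates = {
            take: per_section[section]
            for take, per_section in scores.items()
            if section in per_section
        }
        if not candidates:
            raise KeyError(f"no take covers section {section!r}")
        best = max(candidates, key=candidates.get)
        if current in candidates and candidates[best] - candidates[current] <= switch_margin:
            best = current  # improvement too small to justify a splice
        plan.append((section, best))
        current = best
    return plan


# Made-up scores for two hypothetical takes.
example_scores = {
    "take_a": {"verse": 91.0, "chorus": 88.0},
    "take_b": {"verse": 90.0, "chorus": 89.0},
}
greedy_plan = build_comp_plan(example_scores, ["verse", "chorus"])
sticky_plan = build_comp_plan(example_scores, ["verse", "chorus"], switch_margin=2.0)
```

With a margin of zero the plan switches takes whenever any other take scores higher. A larger margin trades a little per-section score for fewer splices between takes.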

50 Numbered Discoveries

Over months of daily recording and analysis, the system surfaced 50 numbered technique discoveries — from monitoring as the single largest factor (#1), to the B3 drone breakthrough for pitch anchoring (#2), to consonant flicking (#39), formant sustains (#40), and mix pitch (#43). Each discovery was validated by measurable score improvement.

What This Demonstrates

- DSP engineering with real-time audio analysis

- Python scientific computing (NumPy, SciPy, Praat bindings)

- Data pipeline design with measurable outcomes

- Iterative optimization over 168 analysis runs

- Disability compensation through engineering

Get in Touch

This repo is private. Request a walkthrough or view all projects.